As we’ve been researching the AI landscape & how to build applications, a few design patterns are emerging for AI products.

These design patterns are simple mental models. They help us understand how builders are engineering AI applications today & which components may be important in the future.

The first design pattern is the AI query router. A user inputs a query, which is sent to a router: a classifier that categorizes the input.

A recognized query routes to a small language model, which tends to be more accurate, more responsive, & less expensive to operate.

If the query is not recognized, a large language model handles it. LLMs are much more expensive to operate, but they successfully return answers to a larger variety of queries.

In this way, an AI product can balance cost, performance, & user experience.
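Here is a minimal sketch of the router in Python. Everything in it is an assumption for illustration: the keyword table stands in for a trained classifier, & `small_model` & `large_model` are hypothetical placeholders for real model calls.

```python
from typing import Optional

# Hypothetical intents & keywords; a real router would use a trained classifier.
INTENT_KEYWORDS = {
    "billing": ("invoice", "refund", "charge"),
    "password": ("password", "login", "reset"),
}


def classify(query: str) -> Optional[str]:
    """Return the recognized intent, or None if the query is not recognized."""
    text = query.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return None


def small_model(intent: str, query: str) -> str:
    # Placeholder for a cheap, task-specific small language model.
    return f"[small:{intent}] {query}"


def large_model(query: str) -> str:
    # Placeholder for an expensive, general-purpose large language model.
    return f"[large] {query}"


def route(query: str) -> str:
    """Send recognized queries to the small model, everything else to the LLM."""
    intent = classify(query)
    if intent is not None:
        return small_model(intent, query)
    return large_model(query)


if __name__ == "__main__":
    print(route("How do I reset my password?"))
    print(route("Write a poem about elephants"))
```

The fallback to the large model is the important design choice: the router only needs to be confident about the queries it recognizes, & everything else still gets an answer.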

The second design pattern is for training. Models are trained with data, which can be real-world or synthetic (made by another machine), then sent for evaluation.

Evaluation is a topic of much debate today because we lack a gold standard of model greatness. The challenge with evaluating these models is that the inputs can vary enormously: two users are unlikely to ask the same question in the same way.

The outputs can also be quite variable, a result of the non-deterministic & chaotic nature of these algorithms.

Adversarial models will be used to test & evaluate AI. Adversarial models can suggest billions of tests to stress the model. They can be trained to have strengths different from those of the target model. Just as great teammates & competitors improve our performance, adversarial models will play that role for AI.
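To make the idea concrete, here is a toy sketch of adversarial stress testing. It is an illustration under loud assumptions: the "adversary" is just a list of hand-written text perturbations rather than a trained model, & `brittle_model` & `robust_model` are hypothetical stand-ins for a target model. The test checks whether the target gives the same answer when the same question is asked in a different way.

```python
import random
from typing import Callable, List, Tuple

# Toy perturbations standing in for a trained adversarial model (assumption).
PERTURBATIONS: List[Callable[[str], str]] = [
    str.upper,
    lambda s: s + "??",
    lambda s: "please tell me, " + s,
    lambda s: s.replace(" ", "  "),
]


def stress_test(
    model: Callable[[str], str],
    seeds: List[str],
    n_tests: int,
    seed: int = 0,
) -> List[Tuple[str, str]]:
    """Return (original, variant) pairs where the model's answer changed."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n_tests):
        original = rng.choice(seeds)
        variant = rng.choice(PERTURBATIONS)(original)
        if model(variant) != model(original):
            failures.append((original, variant))
    return failures


def brittle_model(query: str) -> str:
    # Hypothetical target that only matches one exact, lowercase phrasing.
    return "Paris" if "capital of france" in query else "unknown"


def robust_model(query: str) -> str:
    # Hypothetical target that normalizes case & whitespace first.
    normalized = " ".join(query.lower().split())
    return "Paris" if "capital of france" in normalized else "unknown"


SEEDS = ["what is the capital of france", "what is the capital of spain"]

if __name__ == "__main__":
    print(len(stress_test(brittle_model, SEEDS, 200)), "failures (brittle)")
    print(len(stress_test(robust_model, SEEDS, 200)), "failures (robust)")
```

A real adversary would generate far more varied inputs than these four edits, but the loop is the same: generate a variant, compare answers, & collect the disagreements.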

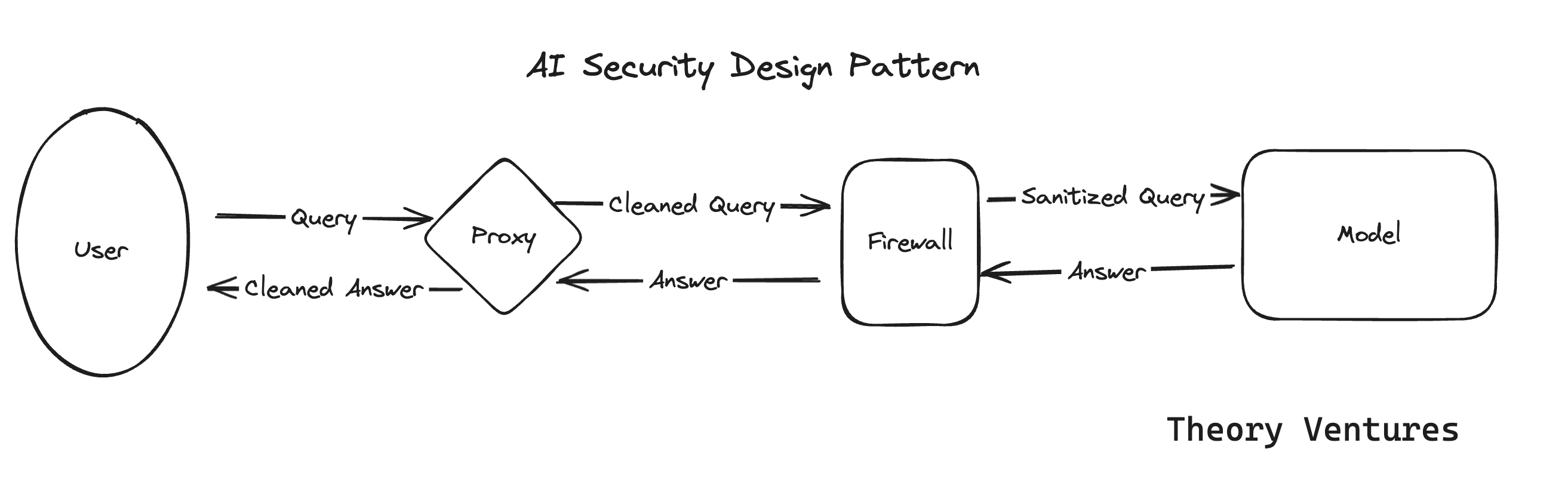

The third design pattern is security. The core security around LLMs has two components: a user component, here called a proxy, & a firewall, which wraps the model.

The proxy intercepts a user query both on the way out & on the way in. The proxy eliminates personally identifiable information (PII) & intellectual property (IP), logs the queries, & optimizes costs.
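A rough sketch of such a proxy might look like the following. The details are assumptions, not a description of any particular product: two simple regular expressions stand in for real PII detection, an in-memory list stands in for a query log, & a response cache is one possible way to optimize costs.

```python
import re
from typing import Callable, Dict, List

# Deliberately simple patterns; real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses & US-style SSNs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN.sub("[SSN]", text)


class Proxy:
    """Hypothetical proxy that sits between the user & the model."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model
        self.log: List[str] = []          # stand-in for a real audit log
        self.cache: Dict[str, str] = {}   # assumed cost optimization

    def ask(self, query: str) -> str:
        clean = redact(query)             # scrub the query on the way out
        self.log.append(clean)
        if clean in self.cache:           # skip a paid model call on repeats
            return self.cache[clean]
        answer = redact(self.model(clean))  # scrub the response on the way in
        self.cache[clean] = answer
        return answer


if __name__ == "__main__":
    proxy = Proxy(lambda q: "echo: " + q)
    print(proxy.ask("Email jane@example.com, SSN 123-45-6789"))
```

Only the redacted query is logged or cached, so the raw PII never leaves the user's side of the proxy.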

The firewall protects the model & the infrastructure it uses. Today, we have only a minimal understanding of how humans can manipulate models to reveal their underlying training data, expose their underlying function, or orchestrate malicious acts. But we know these powerful models are vulnerable.

Other security layers will exist within the stack, but in terms of the query path, these are the most important.

The last of our current design patterns is the AI developer design path.

The developer’s machine is secured with endpoint detection & response, or EDR, to ensure that the data being used to train models & the underlying models are not poisoned.

The developer’s code is sent to a CI/CD system. The CI/CD system checks that the model & the data are correct using signatures (Sig Verification). Today, most software signatures are verified, but those of AI models are not.
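As a sketch of what signature verification of a model artifact could look like, here is a small example using Python's standard `hmac` & `hashlib` modules. It is a simplification: production signing typically uses asymmetric keys so the verifier never holds the signing secret, & the key & artifact bytes below are made up for illustration.

```python
import hashlib
import hmac


def sign(artifact: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 signature over the artifact's bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify(artifact: bytes, signature: str, key: bytes) -> bool:
    """Check the signature using a constant-time comparison."""
    return hmac.compare_digest(sign(artifact, key), signature)


if __name__ == "__main__":
    key = b"demo-signing-key"              # hypothetical key, not a real secret
    weights = b"\x00\x01\x02 model weights"  # stand-in for a model file's bytes

    signature = sign(weights, key)          # done when the model is published
    print(verify(weights, signature, key))  # pipeline check before deploy

    tampered = weights + b" poisoned"
    print(verify(tampered, signature, key))  # any change invalidates the signature
```

The same check applies to training data: sign the dataset when it is approved, & refuse to train or deploy if the bytes no longer match.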

Also, the large language model will be subjected to a testing harness (a series of tests) to ensure that it performs as expected. Real user queries from live traffic will inform the harness.

Once those tests pass, the model is pushed to production.
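The testing harness & the release gate can be sketched in a few lines. The names here (`HarnessCase`, `run_harness`, `release`) & the toy models are hypothetical; in practice the cases would be drawn from real user queries, & the checks would be looser than exact string matches.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class HarnessCase:
    query: str
    check: Callable[[str], bool]  # returns True if the answer is acceptable


def exact(expected: str) -> Callable[[str], bool]:
    """Build a check that passes when the answer matches exactly."""
    return lambda answer: answer.strip() == expected


def run_harness(model: Callable[[str], str], cases: List[HarnessCase]) -> List[str]:
    """Return the queries whose answers failed their check."""
    return [case.query for case in cases if not case.check(model(case.query))]


def release(model: Callable[[str], str], cases: List[HarnessCase]) -> bool:
    """Gate: allow the push to production only if every case passes."""
    return not run_harness(model, cases)


# Toy cases & models for illustration only.
CASES = [
    HarnessCase("2 + 2", exact("4")),
    HarnessCase("capital of France", exact("Paris")),
]
GOOD_ANSWERS: Dict[str, str] = {"2 + 2": "4", "capital of France": "Paris"}


def good_model(query: str) -> str:
    return GOOD_ANSWERS.get(query, "unknown")


def bad_model(query: str) -> str:
    return "5" if query == "2 + 2" else good_model(query)


if __name__ == "__main__":
    print(release(good_model, CASES))
    print(run_harness(bad_model, CASES))
```

Returning the list of failing queries, rather than just a pass or fail flag, makes it easier to see which behavior regressed before retrying the release.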

These are our four current mental models for how large language models will be built, secured, & deployed. These are sketches of each leg of an elephant we are trying to draw in a dark room.

If you have ideas about other design patterns or improvements to the current ones, please contact us. We’d love to improve these to help others.