Two revelations this week have shaken the AI narrative: Nvidia’s earnings & this tweet about Gemini.

The AI industry spent 2025 convinced that pre-training scaling laws had hit a wall. Models weren’t improving just from adding more compute during training.

Then Gemini 3 launched. The model has the same parameter count as Gemini 2.5 (one trillion parameters), yet it delivered massive performance improvements. It’s the first model to break 1500 Elo on LMArena, & it beat GPT-5.1 on 19 of 20 benchmarks.

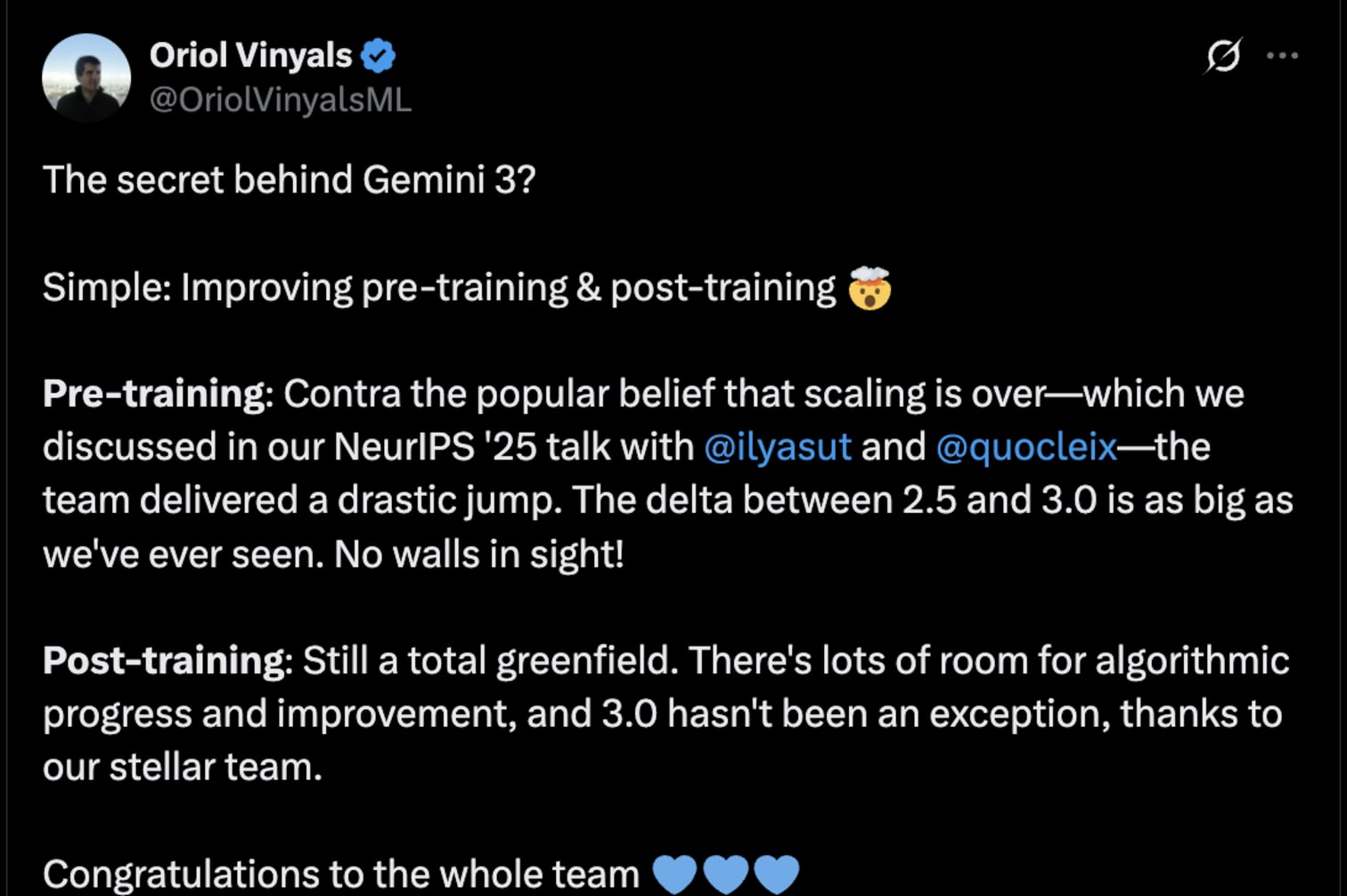

Oriol Vinyals, VP of Research at Google DeepMind, credited improvements to both pre-training & post-training for the gains. He added that the delta between 2.5 & 3.0 is as big as any Google has ever seen, with no walls in sight.

This is the strongest evidence since o1 that pre-training scaling still works when algorithmic improvements meet better compute.

Second, Nvidia’s earnings call confirmed the demand:

> We currently have visibility to $0.5 trillion in Blackwell and Rubin revenue from the start of this year through the end of calendar year 2026. By executing our annual product cadence and extending our performance leadership through full stack design, we believe NVIDIA will be the superior choice for the $3 trillion to $4 trillion in annual AI infrastructure build we estimate by the end of the decade.
>
> The clouds are sold out and our GPU installed base, both new and previous generations, including Blackwell, Hopper and Ampere is fully utilized. Record Q3 data center revenue of $51 billion increased 66% year-over-year, a significant feat at our scale.
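As a rough check on scale, the quoted growth rate lets us back out what the data center business looked like a year earlier. A minimal Python sketch, assuming the $51 billion and 66% figures are exact (both are rounded on the call, so the outputs are approximate); the helper name `prior_period_value` is hypothetical:

```python
def prior_period_value(current: float, growth_pct: float) -> float:
    """Back out the year-ago value from a current value and its YoY growth rate."""
    return current / (1 + growth_pct / 100)


q3_dc_revenue_bn = 51.0  # Q3 data center revenue quoted on the call, in $B
yoy_growth_pct = 66.0    # year-over-year growth quoted on the call, in %

# Implied year-ago quarter and the dollar increase since then.
year_ago_bn = prior_period_value(q3_dc_revenue_bn, yoy_growth_pct)
added_bn = q3_dc_revenue_bn - year_ago_bn

print(f"Implied year-ago data center revenue: ${year_ago_bn:.1f}B")
print(f"Implied YoY increase: ${added_bn:.1f}B")
```

Under those assumptions, the year-ago quarter works out to roughly $30.7 billion, meaning Nvidia added about $20 billion of quarterly data center revenue in twelve months.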

Infrastructure spending is accelerating headlong into the hundreds of billions next year, & Nvidia predicts it will reach the trillions, citing “$3 trillion to $4 trillion in data center by 2030”.

As Gavin Baker points out, Nvidia confirmed that Blackwell Ultra trains 5x faster than Hopper.

Gemini 3 proves the scaling laws are intact, so Blackwell’s extra compute will translate directly into better model capabilities, not just cost efficiency.

Together, these two data points dismantle the scaling wall thesis.